FusionPortable V2

From Campus to Highway: A Unified Multi-Sensor Dataset for Generalized SLAM Across Diverse Platforms and Scalable Environments

News¶

- (2025-09-28) The list of Related Works that have utilized the FusionPortable dataset has been updated.

- (20250410) Some rosbags are extracted as individual files and converted into the KITTI format. Click here to try.

- (20240629) The tutorial on sensor calibration (intrinsics and extrinsics) is provided. Click here to try.

- (20240508) Ground-truth poses of all vehicle-related sequences have been post-processed to eliminate poses with high uncertainty.

- (20240422) Data can be downloaded from Baidu Wang Pan with the code byj8.

- (20240414) All sequences, ground-truth trajectories, and ground-truth maps have been publicly released. If you find issues with the GT trajectories or maps, please contact us or report here.

- (20240413) A small simulated navigation environment is provided.

- (20240408) The development tool has been initially released.

- (20240407) Data can be downloaded from Google Drive.

Overview¶

Usage Steps¶

- Read through the overview of the FusionPortableV2 dataset: sensors, coordinate frames, and definitions of ROS topics and messages.

- Download data from this link.

- Check examples of using the dataset from this link.

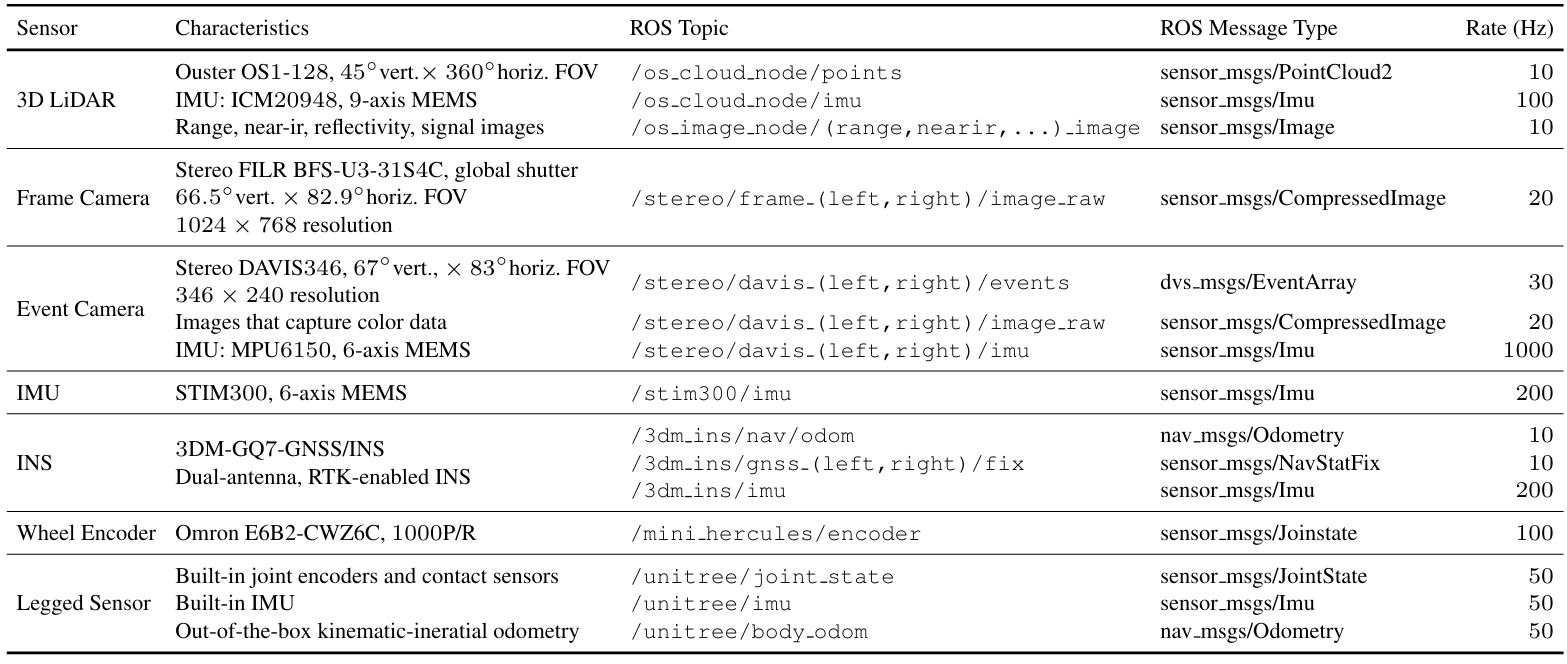

Sensors¶

- Handheld Sensor:

    - 128-beam Ouster LiDAR (OS1, 120 m range);

    - Stereo FLIR BFS-U3-31S4C cameras;

    - Stereo DAVIS346 cameras;

    - STIM300 IMU;

    - 3DM-GQ7-GNSS/INS

- UGV Sensor: Omron E6B2-CWZ6C wheel encoder

- Legged Robot Sensor: Built-in joint encoders, contact sensors, and IMU of the Unitree A1

Definitions of Coordinate Frame¶

| Sensor | Frame ID |
|---|---|
| 3D LiDAR (Ouster OS1-128 and its internal IMU) | ouster00, ouster00_imu |
| Left and Right Frame Camera (not vehicle-related sequences) | frame_cam00, frame_cam01 |
| Left and Right Frame Camera (vehicle-related sequences) | vehicle_frame_cam00, vehicle_frame_cam01 |
| Left and Right Event Camera | event_cam00, event_cam01 |
| Internal IMU of the Left and Right Event Camera | event_cam00_imu, event_cam01_imu |
| IMU (STIM300) | body_imu |
| INS (3DM-GQ7-GNSS/INS) | 3dm_imu |
| Left and Right Wheel Encoder | mini_hercules_wheel00, mini_hercules_wheel01 |
| Legged Sensor of the Unitree Quadruped Robot | unitree_body_imu, unitree_hip, unitree_thign, unitree_calf, unitree_foot |
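The table above lists two pairs of frame IDs for the frame cameras, depending on whether a sequence is vehicle-related. As a minimal sketch, the choice could be wrapped in a small Python helper. The function name `frame_camera_ids` and its boolean argument are hypothetical and are not part of the dataset tooling; only the frame ID strings come from the table.

```python
from typing import Tuple


def frame_camera_ids(vehicle_sequence: bool) -> Tuple[str, str]:
    """Return the (left, right) frame-camera frame IDs for a sequence.

    Hypothetical helper: vehicle-related sequences use the
    "vehicle_" prefix, all other sequences use the plain IDs.
    """
    prefix = "vehicle_" if vehicle_sequence else ""
    return (f"{prefix}frame_cam00", f"{prefix}frame_cam01")


if __name__ == "__main__":
    print(frame_camera_ids(vehicle_sequence=False))
    print(frame_camera_ids(vehicle_sequence=True))
```

Keeping the choice in one place avoids hard-coding the wrong frame ID when the same processing script is run on both handheld and vehicle sequences.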

Explanation of ROS Topic and Message¶

| ROS Message Type | Explanation | Link |
|---|---|---|
| sensor_msgs/PointCloud2 | Contains a set of points. The message timestamp corresponds to the last point in a scan [link]. Each point is encoded with 3D coordinates (x, y, z), intensity, scanned timestamp (nanosecond), reflectivity, ring, ambient, and range. | ROS PointCloud2 message, Ouster Point Definition |
| dvs_msgs/EventArray | Contains a set of events. Each event is encoded with pixel location (x, y), triggered timestamp (std_msgs/Time), and polarity. | ROS EventArray, ROS Event |
| sensor_msgs/Imu | | ROS Imu |
| sensor_msgs/Image | | ROS Image |
| sensor_msgs/CompressedImage | | ROS CompressedImage |
| unitree_legged_msgs/MotorState | A custom message defined by Unitree that provides motor states. The Unitree A1 has 12 motors. Details can be found in Sections 1.4.1-1.4.2 of the Unitree A1 manual. | Unitree MotorState |
| unitree_legged_msgs/LowState | A custom message defined by Unitree that provides contact states. | Unitree LowState |
| sensor_msgs/JointState | Records key information of the motor states and contact states, following the JointState definition listed after this table. | ROS JointState, Code1 and Code2 to define JointState |
| nav_msgs/Odometry | | ROS Odometry |

JointState definition:

- For the ith motor (i<=12, indicating FR0, FR1, FR2, FL0, FL1, FL2, RR0, RR1, RR2, RL0, RL1, RL2):

    - JointState.position[i] = MotorState[i].q (motor current position [rad])

    - JointState.velocity[i] = MotorState[i].dq (motor current speed [rad/s])

    - JointState.effort[i] = MotorState[i].tauEst (current estimated output torque [N*m])

- For the ith foot contact sensor (i<=4, indicating FR_foot, FL_foot, RR_foot, RL_foot):

    - JointState.effort[i+12] = LowState[i].footForce

- Naming:

    - FR0-FR2: Front-Right Hip, Front-Right Thigh, Front-Right Calf

    - FL0-FL2: Front-Left Hip, Front-Left Thigh, Front-Left Calf

    - RR0-RR2: Rear-Right Hip, Rear-Right Thigh, Rear-Right Calf

    - RL0-RL2: Rear-Left Hip, Rear-Left Thigh, Rear-Left Calf

    - FR_foot, FL_foot, RR_foot, RL_foot: Front-Right, Front-Left, Rear-Right, and Rear-Left Foot, respectively
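The JointState layout described above packs 12 motor states and 4 foot contact forces into one message. The following Python sketch illustrates that packing and how the foot forces could be read back. It runs without ROS: `MotorState` and `JointStateArrays` are hypothetical stand-ins for the real message types, and the sketch assumes zero-based indices (motors 0 to 11, feet 0 to 3) and that `position` and `velocity` hold only the 12 motor entries, neither of which the dataset page states.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence

# Motor and foot order as listed in the JointState definition.
MOTOR_NAMES = ["FR0", "FR1", "FR2", "FL0", "FL1", "FL2",
               "RR0", "RR1", "RR2", "RL0", "RL1", "RL2"]
FOOT_NAMES = ["FR_foot", "FL_foot", "RR_foot", "RL_foot"]


@dataclass
class MotorState:
    """Hypothetical stand-in for unitree_legged_msgs/MotorState."""
    q: float       # motor current position [rad]
    dq: float      # motor current speed [rad/s]
    tauEst: float  # current estimated output torque [N*m]


@dataclass
class JointStateArrays:
    """Hypothetical stand-in for the arrays of sensor_msgs/JointState."""
    position: List[float]
    velocity: List[float]
    effort: List[float]


def pack_joint_state(motors: Sequence[MotorState],
                     foot_force: Sequence[float]) -> JointStateArrays:
    """Pack 12 motor states and 4 foot forces (assumed zero-based)."""
    if len(motors) != len(MOTOR_NAMES) or len(foot_force) != len(FOOT_NAMES):
        raise ValueError("expected 12 motor states and 4 foot forces")
    return JointStateArrays(
        position=[m.q for m in motors],
        velocity=[m.dq for m in motors],
        # effort[0:12] holds motor torques, effort[12:16] holds foot forces.
        effort=[m.tauEst for m in motors] + [float(f) for f in foot_force],
    )


def foot_forces(js: JointStateArrays) -> Dict[str, float]:
    """Read the four foot contact forces back out of the effort array."""
    return dict(zip(FOOT_NAMES, js.effort[12:16]))


if __name__ == "__main__":
    motors = [MotorState(q=0.1 * i, dq=1.0 * i, tauEst=2.0 * i)
              for i in range(12)]
    js = pack_joint_state(motors, [10, 20, 30, 40])
    print(foot_forces(js))
```

When reading real sequences, it is worth checking the length of `effort` in an actual message before relying on the offset of 12.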

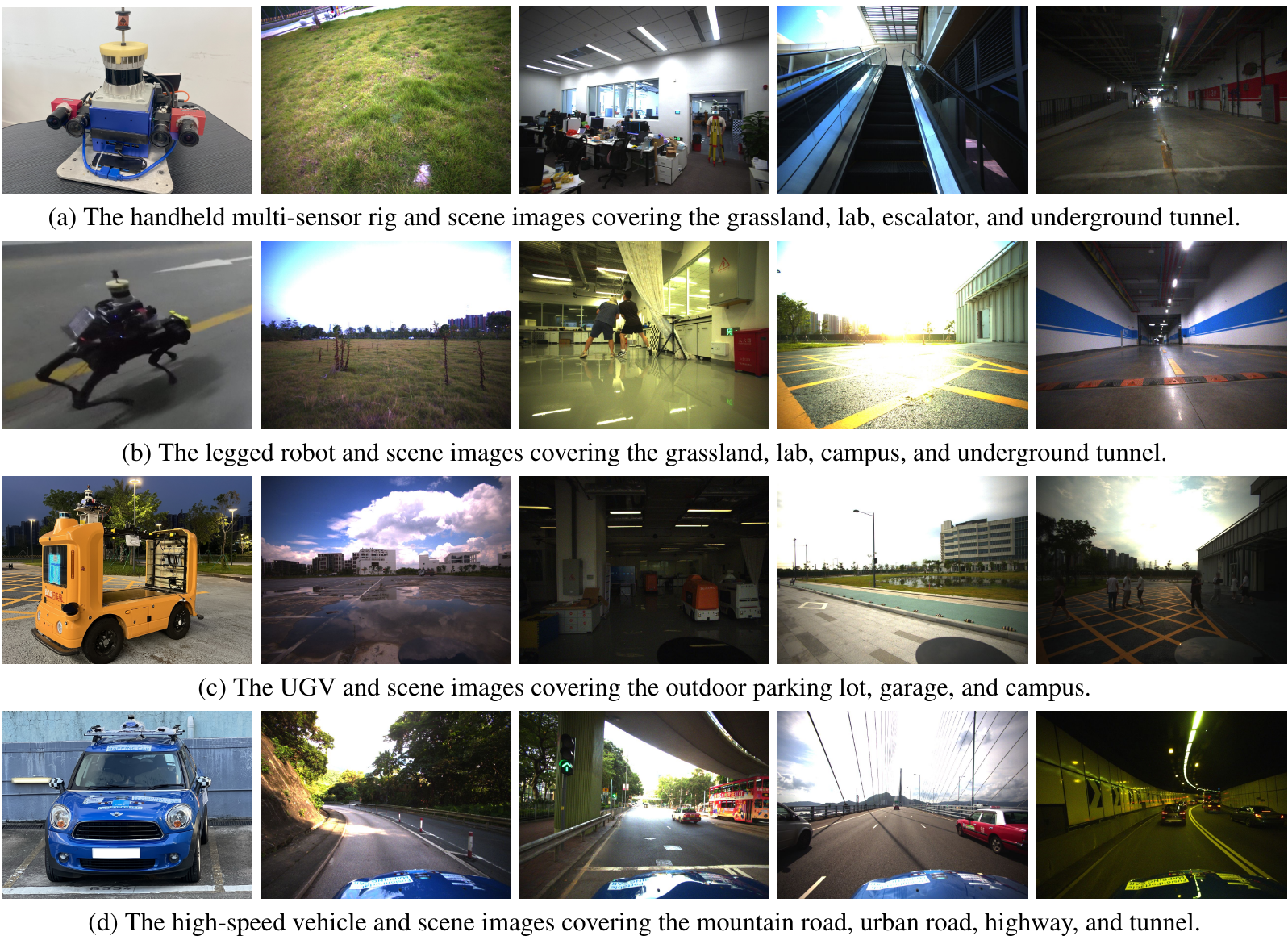

Various Platforms and Scenarios¶

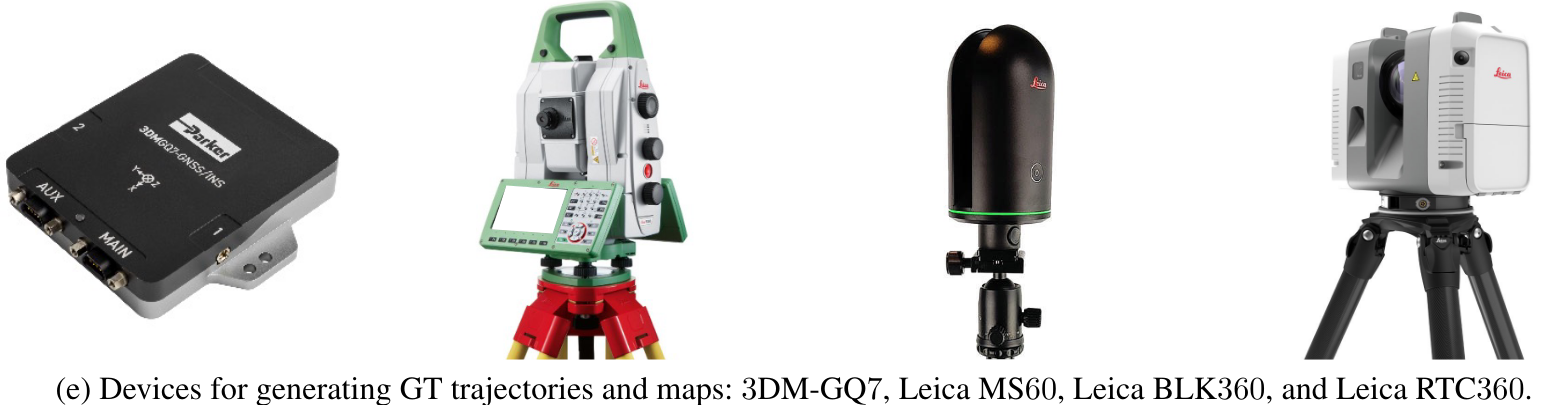

Ground-Truth Devices¶

Third-View of Data Collection¶

| Environment | Platform | Preview |
|---|---|---|
| Escalator | Handheld |  |
| Corridor | Handheld |  |
| Underground Parking Lot | Legged Robot |  |
| Campus | UGV |  |
| Outdoor Parking Lot | UGV |  |

Dataset Details and Download¶

Click the button below to download the dataset.

Sensor Calibration Tutorial¶

Click the button below for the sensor calibration tutorials.

Sensor Calibration Files¶

Please download the correct calibration files for the sequences.

| Calibration Files | Sequences |
|---|---|
| 20230403_calib | Handheld Sequences |
| 20230426_calib | UGV Sequences |
| 20230618_calib | Vehicle Sequences |
| 20230912_calib | Legged Robot Sequences |
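Because each platform has its own calibration set, a processing script can look the folder up instead of hard-coding it. The sketch below is one way to do that in Python. The folder names come from the table above, while the platform keys (`handheld`, `ugv`, `vehicle`, `legged`) and the function name `calibration_folder` are hypothetical labels chosen for illustration.

```python
# Calibration folder per platform, taken from the table above.
# The dictionary keys are hypothetical labels, not dataset identifiers.
CALIB_BY_PLATFORM = {
    "handheld": "20230403_calib",
    "ugv": "20230426_calib",
    "vehicle": "20230618_calib",
    "legged": "20230912_calib",
}


def calibration_folder(platform: str) -> str:
    """Return the calibration folder name for a platform label."""
    key = platform.strip().lower()
    if key not in CALIB_BY_PLATFORM:
        known = ", ".join(sorted(CALIB_BY_PLATFORM))
        raise ValueError(f"unknown platform {platform!r}; expected one of: {known}")
    return CALIB_BY_PLATFORM[key]


if __name__ == "__main__":
    print(calibration_folder("UGV"))
```

Raising an error for an unknown platform, rather than falling back to a default, avoids silently applying the wrong extrinsics to a sequence.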

Experiments¶

Click the button below to check the experimental results.

Tools¶

Click the button below to access the development tool.

Known Issues¶

We have listed some known issues in our dataset:

- Some dynamic objects are present in the ground-truth maps and have not been removed. If you need a "clear" map for experiments, we recommend trying the maps in FusionPortable first.

If you have any other issues, please report them on the repository:

Related Works¶

The FusionPortable datasets have been used in the following papers.

Please check these works if you are interested.

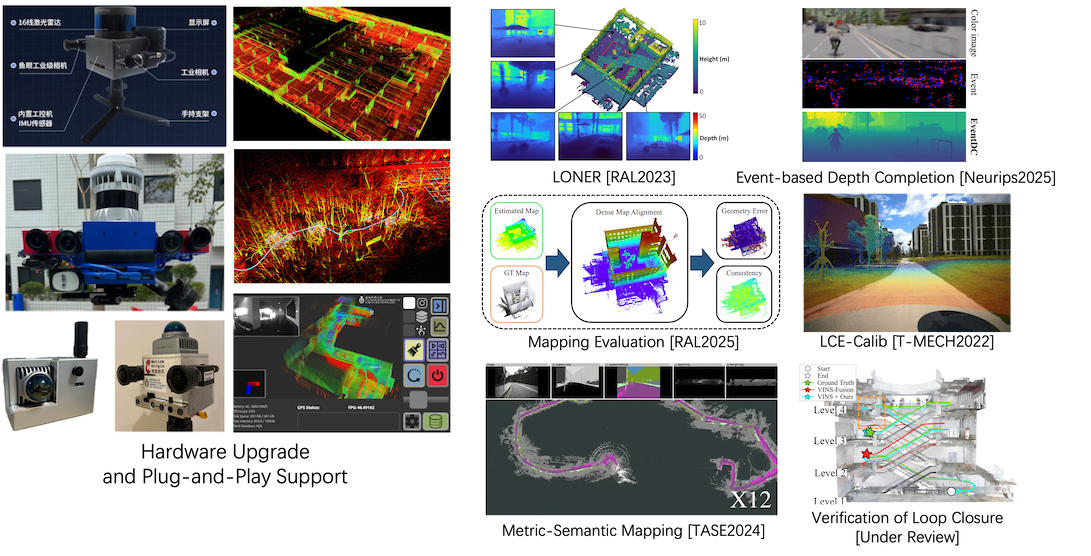

- LiDAR Only Neural Representations for Real-Time SLAM

IEEE Robotics and Automation Letters (RAL) 2023 (Best Paper Award)

- CURL-SLAM: Continuous and Compact LiDAR Mapping

IEEE Transactions on Robotics (T-RO) 2025

- MapEval: Towards Unified, Robust and Efficient SLAM Map Evaluation Framework

IEEE Robotics and Automation Letters (RAL) 2025

- Event-Driven Dynamic Scene Depth Completion

Neural Information Processing Systems (NeurIPS) 2025

- Speak the Same Language: Global LiDAR Registration on BIM Using Pose Hough Transform

IEEE Transactions on Automation Science and Engineering (T-ASE) 2025

- Graph Optimality-Aware Stochastic LiDAR Bundle Adjustment with Progressive Spatial Smoothing

IEEE Transactions on Intelligent Transportation Systems (TITS) 2025

- Ms-Mapping: An Uncertainty-aware Large-Scale Multi-Session LiDAR Mapping System

Preprint

- ROVER: Robust Loop Closure Verification with Trajectory Prior in Repetitive Environments

Preprint

- OpenNavMap: Multi-Session Structure-Free Topometric Mapping for Visual Navigation

Preprint

Publications¶

- FusionPortableV2: A Unified Multi-Sensor Dataset for Generalized SLAM Across Diverse Platforms and Scalable Environments

Hexiang Wei*, Jianhao Jiao*, Xiangcheng Hu, Jingwen Yu, Xupeng Xie, Jin Wu, Yilong Zhu, Yuxuan Liu, et al.

The International Journal of Robotics Research (2024).

[Paper] [Arxiv]

Contact¶

- Dr. Jianhao Jiao (jiaojh1994 at gmail dot com): General questions about the dataset and collaboration.

- Mr. Hexiang Wei (hweiak at connect dot ust dot hk): Problems related to hardware.